Immersive AR-powered shopping for quick checkout and product insights.

FastLane raised over $30k from Snap Inc. to build an AR experience that eliminates checkout lines by automatically detecting picked-up products, while providing hyper-personalised product recommendations and real-time reviews to enhance the in-store shopping experience.

Client/Company

https://www.spectacles.com/?lang=en-US

My Role

Founder

Lead Designer

Lead Engineer (AR & Full-stack)

Collaborators

Snap Inc. Spectacles Team

Duration

March ’25 - Present

Identifying retail pain points through market analysis

When you shop in a store, you have probably faced more than one of these problems:

You wonder if it’s the right product for you

You have trouble finding things

You spend more than 10 minutes at checkout

What do shoppers and stores need?

- 75% of shoppers want personalised product recommendations

- Companies that excel at personalisation generate up to 40% more revenue

- Over 50% of shoppers leave if they have to queue for more than 7 minutes

- 75% of shoppers cannot find the items they want, leading to losses of $8.1B annually

Why are current solutions not working?

- Limited visibility into the customer journey, dwell time, and actions at the product and aisle level

- Lack of product insights at the aisle level

Why is AR not everywhere yet?

- Expensive hardware without compelling use cases.

- Interactions mimic screens and not the real world.

- Without haptic feedback, users experience imprecise interactions, leading to frustration and increased fatigue.

- Voice is an underrated and underused input for AI chat.

*Data collected from independent research and user testing, as well as BCG and McKinsey studies

Hardware: Snap Spectacles 2025

Lightweight AR glasses featuring see-through lenses, dual processors, AI integration, and spatial computing capabilities, launching publicly in 2026 for consumer use.

- See-Through Waveguide Displays with 46° Field of View

- Hand Tracking and Voice Command Control

- 30-40 min battery life

- Uses Lens Studio for building applications

Technical Constraints for Design

- Displays that work by projecting light cannot show dark colours or blur the background, which makes it very difficult to create contrast for white text

- The FoV is small, leaving room only for a vertical layout (elements in the center would obstruct the user’s view)

From gesture controls to contextual intelligence

V1 Design and Testing Insights

The first iteration used a gesture-based interface in which mid-air swipes added products to or removed them from the cart. It showed only basic product information, such as name and price, along with customer reviews.

Issues

- Swipe actions were not distinct enough and triggered false positives, limiting accuracy to just over 60%

- Customer reviews alone could not answer “Is this product right for me?”

Converting product confusion into confident purchases

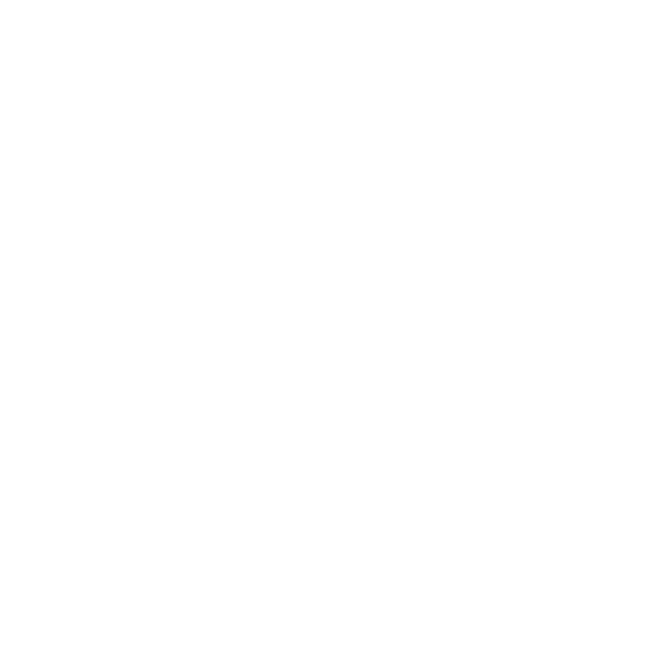

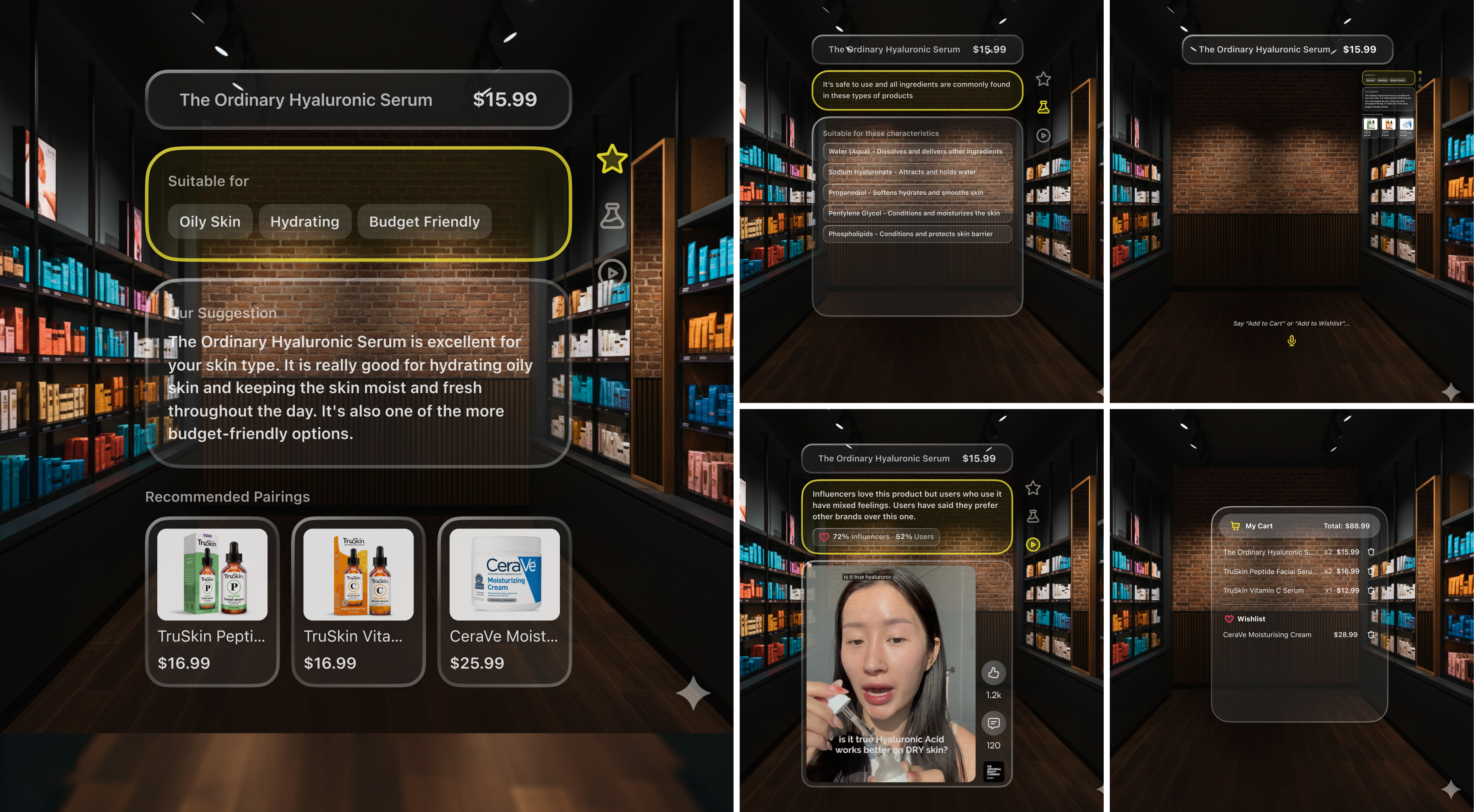

Modern Minimalistic UI with Natural Gestures and Voice Commands

The v2.0 prototype builds on v1.0 and addresses all of its concerns, adding much more product information while keeping interactions seamless.

Pairing with Phone

Users can walk into the store, pick up the glasses, and pair them with a companion app on their phone.

Product Recognition + AI Conversation

Holding a product triggers an instant data fetch: AI recommendations, ingredients, and profile insights. The voice command "Hey FastLane" activates a contextual AI assistant with full user and product context.

Note: AI responses play internally on the glasses, so they are not clear in the recording but can be heard faintly
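To make the "Hey FastLane" flow more concrete, here is a minimal Python sketch of how a wake phrase and the held product could be turned into a context-rich request. It is not FastLane's Lens Studio code: it assumes speech has already been transcribed to text and the held product already recognized, and the `UserProfile` and `Product` fields, `extract_query`, and `build_prompt` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

WAKE_PHRASE = "hey fastlane"  # compared case-insensitively


@dataclass
class UserProfile:
    skin_type: str        # hypothetical profile fields
    concerns: List[str]


@dataclass
class Product:
    name: str
    price: float
    ingredients: List[str]


def extract_query(transcript: str) -> Optional[str]:
    """Return the request after the wake phrase, or None if the phrase is absent."""
    text = transcript.strip()
    if text[:len(WAKE_PHRASE)].lower() != WAKE_PHRASE:
        return None
    rest = text[len(WAKE_PHRASE):]
    if rest[:1].isalnum():  # reject near-misses such as "Hey FastLanes"
        return None
    return rest.lstrip(" ,.!?")


def build_prompt(user: UserProfile, held: Product, query: str) -> str:
    """Combine the user's profile, the product in hand and the spoken question."""
    return (
        f"User skin type: {user.skin_type}; concerns: {', '.join(user.concerns)}.\n"
        f"Held product: {held.name} (${held.price:.2f}); "
        f"ingredients: {', '.join(held.ingredients)}.\n"
        f"Question: {query}"
    )


user = UserProfile(skin_type="oily", concerns=["acne"])
serum = Product(name="Vitamin C Serum", price=24.0, ingredients=["Water", "Ascorbic Acid"])
query = extract_query("Hey FastLane, will this break me out?")
if query is not None:
    print(build_prompt(user, serum, query))
```

The resulting prompt would then be passed to whichever language model backs the assistant; that call is omitted because the specific model and API are not described in this case study.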

Auto-minimise UI

Product details scale down automatically to reduce UI clutter while the user focuses on the real world.

Recommendation Details + YouTube Shorts

Answers "Is this right for me?" through AI-matched recommendations, ingredient alerts, review synthesis, and short-form influencer videos.

Note: YouTube Shorts were not captured in the screen recording, but they can be heard faintly
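Ingredient alerts and profile matching can be illustrated with a simple rule-based sketch. This is a stand-in for the AI matching described above, not the actual implementation: the `Profile` and `Product` fields (an avoid list and concern tags) and the `ingredient_alerts` and `recommend` helpers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Profile:
    concerns: set[str]   # e.g. {"acne", "oiliness"} (hypothetical tags)
    avoid: set[str]      # ingredients the user wants to be alerted about


@dataclass
class Product:
    name: str
    ingredients: list[str]
    targets: set[str] = field(default_factory=set)  # concerns the product claims to address


def ingredient_alerts(product: Product, profile: Profile) -> list[str]:
    """Ingredients in the product that are on the user's avoid list (case-insensitive)."""
    avoid = {a.strip().lower() for a in profile.avoid}
    return [i for i in product.ingredients if i.strip().lower() in avoid]


def recommend(candidates: list[Product], profile: Profile) -> list[Product]:
    """Alert-free products that target at least one concern, most matches first."""
    scored = [
        (len(p.targets & profile.concerns), p)
        for p in candidates
        if not ingredient_alerts(p, profile)
    ]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [p for _, p in ranked]


profile = Profile(concerns={"acne", "oiliness"}, avoid={"Fragrance"})
serum = Product("Serum A", ["Water", "Niacinamide", "Fragrance"], {"acne"})
gel = Product("Gel B", ["Water", "Salicylic Acid"], {"acne", "oiliness"})
toner = Product("Toner C", ["Water", "Witch Hazel"], {"oiliness"})
print(ingredient_alerts(serum, profile))
print([p.name for p in recommend([serum, toner, gel], profile)])
```

Filtering out flagged products before ranking means a strong concern match can never outweigh an ingredient alert.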

Voice-Controlled Cart Actions

Voice commands manage the cart without the false positives of gesture recognition, and they naturally support variations in phrasing.
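One way to support phrase variations is to map many phrasings onto a small set of cart intents. The minimal sketch below assumes the utterance has already been transcribed to text and that the held product is known from recognition; the keyword rules, `parse_cart_command`, and `Cart` are hypothetical and not FastLane's implementation.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Optional


def parse_cart_command(utterance: str) -> Optional[str]:
    """Map a free-form phrase to 'add', 'remove', 'wishlist' or None (hypothetical keyword rules)."""
    # Normalise curly apostrophes and the two-word spelling "wish list".
    text = utterance.lower().replace("\u2019", "'").replace("wish list", "wishlist")
    words = set(re.findall(r"[a-z']+", text))
    # Order matters: more specific intents are checked first.
    if words & {"wishlist", "later"}:
        return "wishlist"
    if words & {"remove", "delete", "drop", "don't"} or {"take", "out"} <= words:
        return "remove"
    if words & {"add", "buy", "take", "want", "cart"}:
        return "add"
    return None


@dataclass
class Cart:
    items: dict[str, int] = field(default_factory=dict)
    wishlist: set[str] = field(default_factory=set)

    def apply(self, intent: Optional[str], product: str) -> None:
        """Apply an intent to the product the user is currently holding."""
        if intent == "add":
            self.items[product] = self.items.get(product, 0) + 1
        elif intent == "remove" and product in self.items:
            self.items[product] -= 1
            if self.items[product] == 0:
                del self.items[product]
        elif intent == "wishlist":
            self.wishlist.add(product)


cart = Cart()
held = "Gel B"  # product currently recognised in the user's hand
for phrase in ["Add this to my cart", "I’ll take it", "Remove it", "Save it for later"]:
    cart.apply(parse_cart_command(phrase), held)
print(cart.items, cart.wishlist)
```

Checking the more specific intents first means "take it out" is read as a removal even though "take" on its own maps to adding, and any phrase that matches no rule leaves the cart untouched.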

Interactive Cart Window

This window lets users glance at and update their cart and wishlist seamlessly.

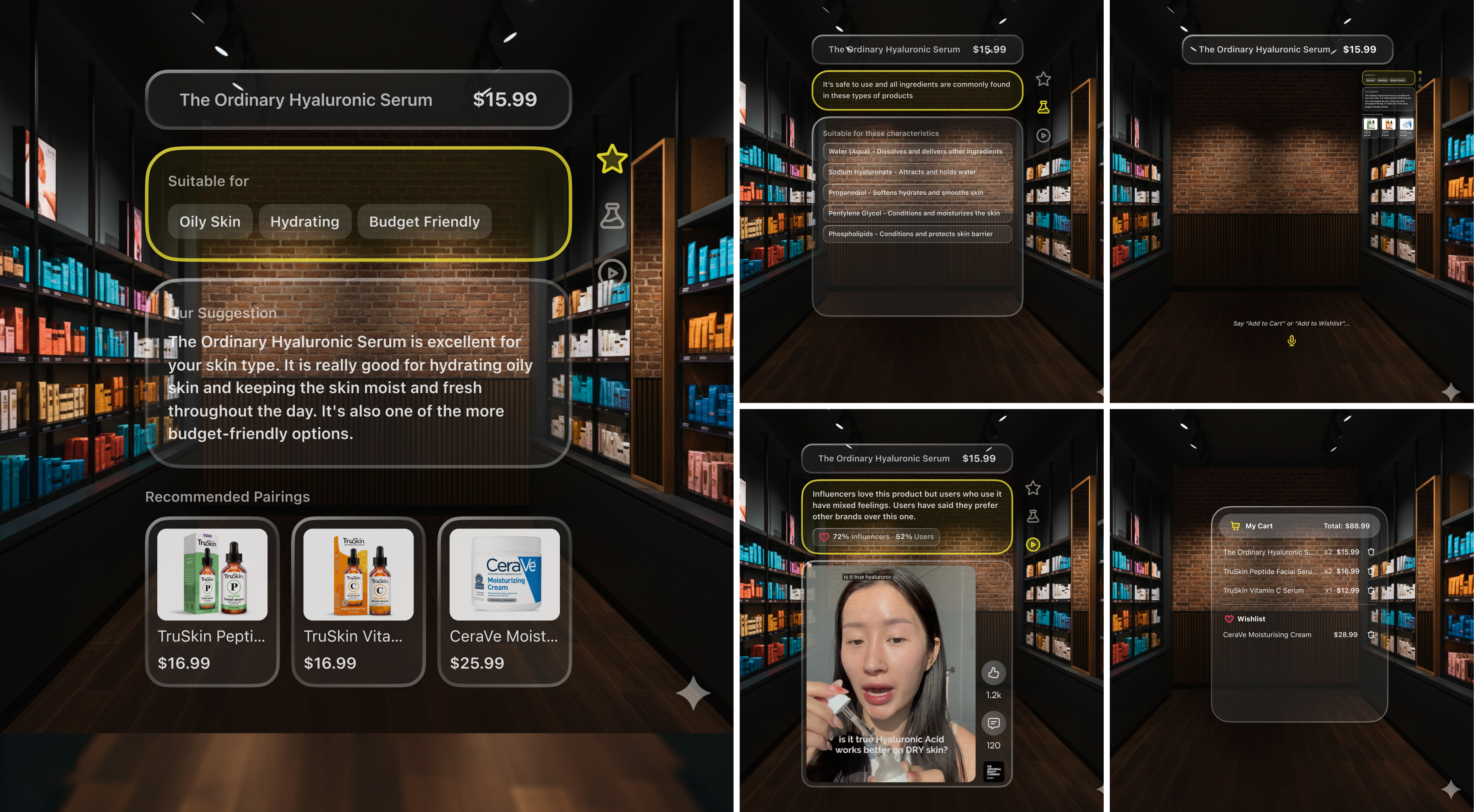

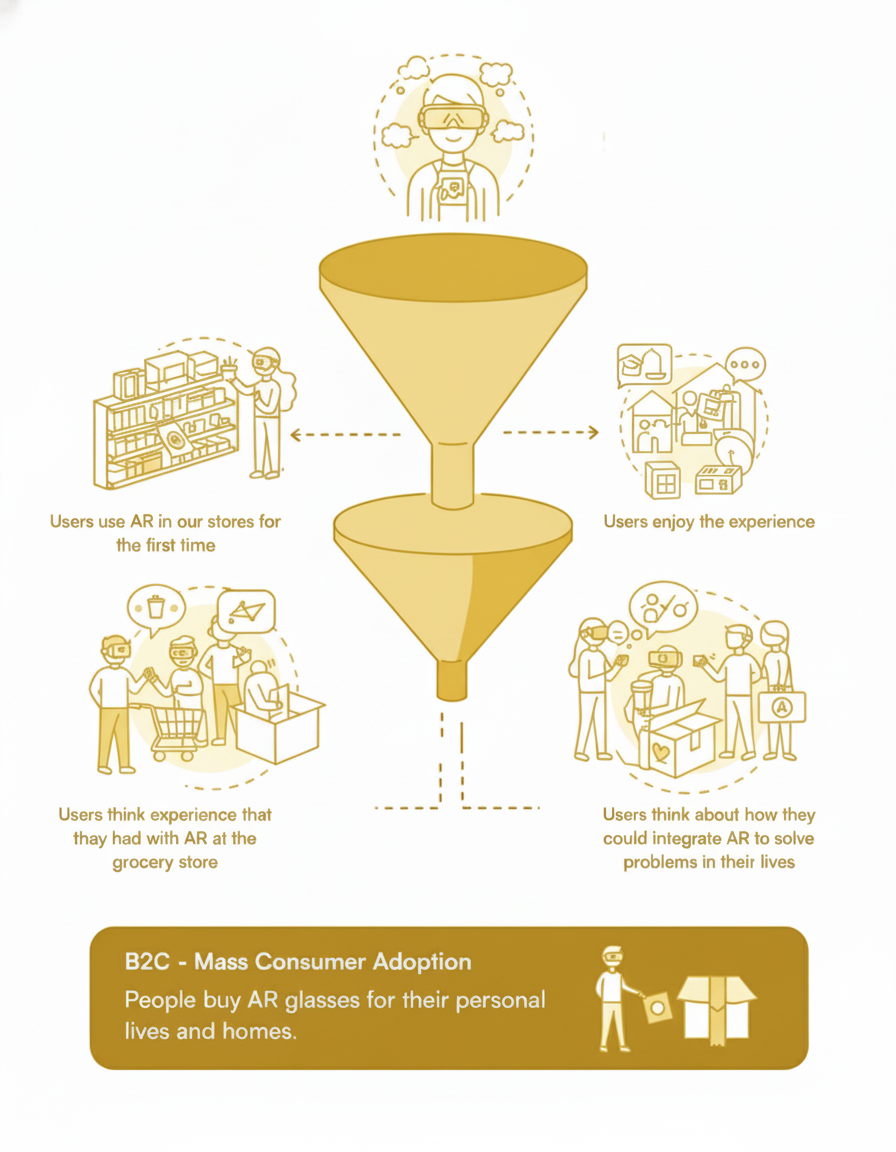

Retailers first, then direct consumer adoption

- B2C adoption is too slow: waiting for mass consumer AR adoption delays market entry.

- Stores buy the glasses, not customers: just like shopping carts, users borrow AR glasses while shopping.

- Retailers gain efficiency and sales: faster checkout, fewer employees, shorter turnaround time, and more footfall.

- Path to consumer ownership: once people experience AR shopping, they may want it at home, just like personal computers.

This mirrors how PCs gained adoption, starting in workplaces before reaching consumers. I explored this idea further in a LinkedIn post.

Balancing interaction naturalism with hardware constraints

Learnings

AR display constraints taught me that contextual intelligence matters more than comprehensive data. Combining gesture and voice controls provided the necessary accuracy. Most importantly, the UI must fade into the background, serving as a silent assistant that lets users focus on the physical world.

Future Scope

Next, we're developing natural gesture recognition that uses computer vision to recognize shopping behaviors without voice commands. We're also exploring expansion into categories beyond beauty, real-time inventory integration, AR navigation, and a B2B analytics dashboard that helps retailers understand shopper behavior and engagement patterns.

Get In Touch!

apharkare@gmail.com


© Aarya Harkare 2025. All Rights Reserved.
